How Process Owners Can Design Processes That Work Even When Tools Keep Changing

Last year's CRM implementation hasn't even fully stabilized, and already leadership is evaluating alternatives. The finance team just migrated to a new ERP, and rumors suggest another change is coming. Every time you turn around, another application gets replaced, upgraded, or deprecated.

For process owners, this constant technology churn creates operational chaos. Workflows carefully designed around specific tool capabilities break when those tools change. Integrations built over months become obsolete when connected systems get replaced. And the process documentation you finally completed references systems that no longer exist.

The only sustainable response is designing tool-agnostic workflows: processes that define business logic independently of the technology that implements them. When tools change, processes stay stable because the core design never depended on tool-specific characteristics.

Understanding how to create a flexible workflow design that accommodates technology evolution has become essential for process owners who can't afford to rebuild workflows every time the technology landscape shifts.

Why tools keep changing

Before addressing the solution, it's worth understanding why technology environments are so unstable. This context informs design decisions that improve resilience.

Vendor dynamics

The SaaS market experiences constant consolidation, disruption, and evolution. Vendors get acquired, changing product direction. Competitors introduce capabilities that make current tools obsolete. Pricing changes force reconsideration of previously settled decisions.

Organizations can't control these vendor dynamics. They can only design processes that accommodate inevitable change.

Organizational evolution

As companies grow, their technology needs evolve. Tools appropriate for a 100-person company may not scale to 1,000. Solutions that serve regional operations may not work for global expansion. Technology that fit yesterday's business model may not suit tomorrow's strategy.

Even without external pressure, internal evolution drives technology change.

Technology advancement

Technology capabilities advance continuously. What required custom development yesterday becomes commodity functionality today. Tools that were best-of-breed become mediocre as alternatives emerge. Standing still means falling behind.

Organizations that want to leverage technology advancement must accept the change that comes with it.

User expectations

As consumer technology improves, employee expectations for business tools increase accordingly. Clunky interfaces that users tolerated previously become unacceptable when their personal devices provide superior experiences. User pressure drives tool replacement even when functionality remains adequate.

Research indicates that the average enterprise now manages 106 SaaS applications, with change happening continuously as applications are added, replaced, and deprecated. In a 2024 survey, mid-sized companies saw a 29% reduction in the number of SaaS apps, indicating significant consolidation and tool replacement activity.

Principles of tool-agnostic workflow design

Process stability strategies that survive technology change share design characteristics that separate business logic from implementation specifics.

Separate what from how

The most fundamental principle distinguishes what a process accomplishes from how specific tools accomplish it. The what should remain stable across tool changes. The how can vary without affecting process integrity.

For example, a process might require "notify approvers of pending items." That's the what. Whether notification happens through email, Slack, in-app alerts, or some future communication technology is the how. Design processes around the what, treating the how as implementation details that tools provide.
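The notification example can be sketched in code. This is a minimal illustration, not a prescribed implementation: the names `Notifier`, `InMemoryNotifier`, and `notify_approvers` are hypothetical, and the in-memory channel stands in for email, chat, or any other delivery tool.

```python
from typing import Protocol


class Notifier(Protocol):
    """The 'how': any channel able to deliver a message to a person."""

    def send(self, recipient: str, message: str) -> None: ...


class InMemoryNotifier:
    """Stand-in channel for illustration; a real one would wrap email, chat, etc."""

    def __init__(self) -> None:
        self.sent: list[tuple[str, str]] = []

    def send(self, recipient: str, message: str) -> None:
        self.sent.append((recipient, message))


def notify_approvers(approvers: list[str], pending_items: int, notifier: Notifier) -> None:
    """The 'what': every approver is told how many items await approval."""
    for approver in approvers:
        notifier.send(approver, f"You have {pending_items} pending item(s) to approve.")


channel = InMemoryNotifier()
notify_approvers(["ana", "raj"], 3, channel)
```

Swapping the channel means passing a different object with a `send` method; `notify_approvers` itself does not change.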

Abstract tool-specific capabilities

When processes depend on specific tool capabilities, they become vulnerable when those capabilities change. Process stability requires abstracting tool-specific features into generic capabilities that multiple tools could provide.

Rather than designing around "Salesforce opportunity stages," design around "sales pipeline milestones." Rather than depending on "ServiceNow incident categories," depend on "support request classifications." This abstraction enables tool replacement without process redesign.
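One way to picture this abstraction is a small mapping from a tool's vocabulary to generic milestones. The sketch below is an assumption-laden example: the stage names, the `PipelineMilestone` values, and the contract-review rule are all hypothetical and would come from your own CRM configuration and process definitions.

```python
from enum import Enum


class PipelineMilestone(Enum):
    """Generic milestones the process reasons about."""
    QUALIFIED = "qualified"
    PROPOSAL_SENT = "proposal_sent"
    WON = "won"
    LOST = "lost"


# Hypothetical tool stage names; only this table changes when the CRM changes.
CRM_STAGE_TO_MILESTONE = {
    "Qualification": PipelineMilestone.QUALIFIED,
    "Proposal/Price Quote": PipelineMilestone.PROPOSAL_SENT,
    "Closed Won": PipelineMilestone.WON,
    "Closed Lost": PipelineMilestone.LOST,
}


def to_milestone(tool_stage: str) -> PipelineMilestone:
    """Translate a tool-specific stage into a generic milestone."""
    try:
        return CRM_STAGE_TO_MILESTONE[tool_stage]
    except KeyError:
        raise ValueError(f"Unmapped stage: {tool_stage!r}") from None


def needs_contract_review(milestone: PipelineMilestone) -> bool:
    """Process rule expressed only in terms of generic milestones."""
    return milestone is PipelineMilestone.PROPOSAL_SENT
```

The process rule never sees a tool stage name, so replacing the CRM means rewriting the mapping table rather than the rule.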

Define clear interfaces

Where processes must interact with tools, define interfaces that specify what information transfers and what actions occur, without specifying how tools implement those interactions.

Clear interface definitions enable tools to be replaced without affecting processes, as long as replacement tools can satisfy the interface requirements. They also make integration requirements explicit, simplifying tool evaluation and transition planning.
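As a rough sketch of what such an interface could look like, the example below states the information that transfers (a data class) and the actions that occur (a protocol). All names here, including `ExpenseClaim`, `PaymentInterface`, and `FakeFinanceTool`, are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from typing import Protocol


@dataclass(frozen=True)
class ExpenseClaim:
    """What information transfers: business fields only, no tool-specific IDs."""
    claim_id: str
    employee: str
    amount: float
    currency: str


class PaymentInterface(Protocol):
    """What actions occur: schedule a reimbursement and report whether it is paid."""

    def schedule_payment(self, claim: ExpenseClaim) -> None: ...

    def is_paid(self, claim_id: str) -> bool: ...


class FakeFinanceTool:
    """Hypothetical in-memory implementation used only to exercise the interface."""

    def __init__(self) -> None:
        self._paid: dict[str, bool] = {}

    def schedule_payment(self, claim: ExpenseClaim) -> None:
        self._paid[claim.claim_id] = False

    def mark_paid(self, claim_id: str) -> None:
        self._paid[claim_id] = True

    def is_paid(self, claim_id: str) -> bool:
        return self._paid.get(claim_id, False)


def reimburse(claim: ExpenseClaim, finance: PaymentInterface) -> None:
    """Process step written against the interface, not a specific finance tool."""
    finance.schedule_payment(claim)
```

Any replacement finance tool that can offer these two operations over the same fields satisfies the interface, which also makes it a concrete checklist during tool evaluation.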

Embed business rules in process logic, not tool configuration

Many tools enable configuring business rules within their interfaces. While convenient, this approach ties business logic to specific tools. When tools change, that logic must be recreated.

Tool-agnostic design embeds business rules in process logic that remains independent of any particular tool. Tools execute decisions based on instructions from the process layer rather than internally configured rules.
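To make the idea concrete, here is a minimal sketch of an approval rule kept in the process layer. The thresholds and role names are invented for illustration; the point is only that the rule lives in one place outside any tool's configuration screen.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PurchaseRequest:
    amount: float
    department: str


# Hypothetical thresholds; real values belong to your approval policy.
CFO_THRESHOLD = 50_000
DEPARTMENT_HEAD_THRESHOLD = 5_000


def required_approver(request: PurchaseRequest) -> str:
    """Decide who must approve, independent of whichever tool routes the task."""
    if request.amount >= CFO_THRESHOLD:
        return "cfo"
    if request.amount >= DEPARTMENT_HEAD_THRESHOLD:
        return "department_head"
    return "manager"
```

A workflow tool would then be told whom to route the task to, rather than holding its own copy of the thresholds.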

Flexible workflow design patterns

Translating tool-agnostic principles into practical designs requires specific patterns.

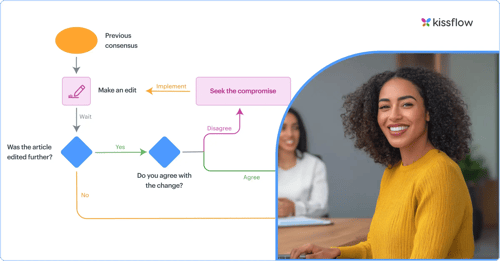

Adapter pattern

The adapter pattern inserts a translation layer between processes and tools. Processes communicate with adapters that speak the process's language. Adapters translate those communications into tool-specific interactions.

When tools change, only adapters require modification. Process logic remains unchanged. This pattern localizes tool dependency, reducing change impact.
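A small sketch of the adapter pattern follows. The vendor client, its `create_incident` method, and the category codes are hypothetical stand-ins for whatever a real helpdesk tool exposes.

```python
from typing import Protocol


class TicketSystem(Protocol):
    """The process's language: open a ticket with a business classification."""

    def open_ticket(self, summary: str, classification: str) -> str: ...


class LegacyHelpdeskClient:
    """Hypothetical vendor client with its own vocabulary and numeric codes."""

    def create_incident(self, short_description: str, category_code: int) -> str:
        return f"INC-{category_code}-1"


class LegacyHelpdeskAdapter:
    """Translates process calls into the vendor client's calls."""

    _CATEGORY_CODES = {"hardware": 10, "access": 20}

    def __init__(self, client: LegacyHelpdeskClient) -> None:
        self._client = client

    def open_ticket(self, summary: str, classification: str) -> str:
        code = self._CATEGORY_CODES[classification]
        return self._client.create_incident(summary, code)


def handle_access_request(user: str, tickets: TicketSystem) -> str:
    """Process logic: knows only the TicketSystem interface."""
    return tickets.open_ticket(f"Grant access for {user}", "access")


ticket_id = handle_access_request("ana", LegacyHelpdeskAdapter(LegacyHelpdeskClient()))
```

If the helpdesk tool is replaced, a new adapter class is written and `handle_access_request` stays as it is.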

Event-driven decoupling

Event-driven architectures decouple processes from tools through asynchronous event communication. Processes publish events describing what happened. Tools subscribe to relevant events and respond according to their capabilities.

This decoupling means processes don't need to know which tools are listening. Tools can be added, replaced, or removed without process modification, as long as event handling continues.
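The publish and subscribe relationship can be sketched with a tiny in-process event bus. This is a simplification: handlers here run synchronously in the same program, whereas a production setup would typically use a message broker for asynchronous delivery. The event name and handlers are hypothetical.

```python
from collections import defaultdict
from typing import Callable


class EventBus:
    """Minimal in-process bus; handlers run synchronously when an event is published."""

    def __init__(self) -> None:
        self._subscribers: dict[str, list[Callable[[dict], None]]] = defaultdict(list)

    def subscribe(self, event_type: str, handler: Callable[[dict], None]) -> None:
        self._subscribers[event_type].append(handler)

    def publish(self, event_type: str, payload: dict) -> None:
        # The publisher does not know who is listening, or whether anyone is.
        for handler in self._subscribers[event_type]:
            handler(payload)


bus = EventBus()
audit_log: list[str] = []
chat_messages: list[str] = []

# Two hypothetical tools subscribe independently to the same business event.
bus.subscribe("invoice.approved", lambda e: audit_log.append(e["invoice_id"]))
bus.subscribe("invoice.approved", lambda e: chat_messages.append(f"Invoice {e['invoice_id']} approved"))

# The process publishes what happened, nothing more.
bus.publish("invoice.approved", {"invoice_id": "INV-7"})
```

Removing the chat subscriber or adding a third tool requires no change to the line that publishes the event.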

State machine process models

State machine models define processes as transitions between discrete states, with transitions triggered by conditions rather than tool interactions. This approach keeps business logic in the state machine while tools handle mechanics that enable state transitions.

State machines prove particularly resilient because they define processes in business terms that remain meaningful regardless of implementation technology.
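A minimal sketch of a state machine for an approval process is shown below. The states and trigger names are hypothetical; the table of allowed transitions is the business logic, and nothing in it names a tool.

```python
from enum import Enum


class State(Enum):
    DRAFT = "draft"
    SUBMITTED = "submitted"
    APPROVED = "approved"
    REJECTED = "rejected"


# Allowed transitions, expressed purely in business terms.
TRANSITIONS = {
    (State.DRAFT, "submit"): State.SUBMITTED,
    (State.SUBMITTED, "approve"): State.APPROVED,
    (State.SUBMITTED, "reject"): State.REJECTED,
    (State.REJECTED, "revise"): State.DRAFT,
}


def advance(state: State, trigger: str) -> State:
    """Return the next state, or raise if the transition is not allowed."""
    try:
        return TRANSITIONS[(state, trigger)]
    except KeyError:
        raise ValueError(f"Cannot '{trigger}' from {state.value}") from None
```

Whatever tool collects the approval click or the revision, it only needs to report the trigger; the decision about what that trigger means stays in the table.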

Capability-based requirements

Rather than specifying which tools processes use, specify which capabilities processes require. A process might require "document storage with version control" rather than "SharePoint." This capability-based approach enables tool substitution without process redesign.
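Capability-based requirements lend themselves to a simple set comparison. In this sketch the capability labels and the candidate tools (`tool_a`, `tool_b`) are made up for illustration; a real inventory would come from your own tool evaluations.

```python
# Capabilities the process requires, named in business terms.
REQUIRED = {"document_storage", "version_control"}

# Hypothetical candidates and the capabilities each one offers.
CANDIDATES = {
    "tool_a": {"document_storage", "version_control", "e_signature"},
    "tool_b": {"document_storage"},
}


def missing_capabilities(required: set[str], offered: set[str]) -> set[str]:
    """Capabilities the process needs that a tool does not provide."""
    return required - offered


def qualifying_tools(required: set[str], candidates: dict[str, set[str]]) -> list[str]:
    """Names of tools that cover every required capability."""
    return sorted(name for name, offered in candidates.items() if required <= offered)
```

Here `qualifying_tools(REQUIRED, CANDIDATES)` would list only `tool_a`, and `missing_capabilities` shows exactly what `tool_b` lacks.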

According to Gartner, by 2028, 60% of software development organizations will adopt low-code platforms as their primary development platform. Low-code BPM platforms provide the abstraction layers that tool-agnostic design needs, making process stability more achievable than with traditional development approaches.

Managing tool transitions

Even well-designed tool-agnostic workflows require careful management during tool transitions.

Impact assessment

Before any tool change, assess process impacts systematically. Which processes interact with the changing tool? What interfaces need adjustment? What capabilities might be affected?

This assessment prevents surprise disruptions and enables proactive planning.
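The first assessment question, which processes interact with the changing tool, can be answered from a dependency inventory. The sketch below assumes such an inventory exists; the process and tool names are hypothetical.

```python
# Hypothetical inventory: each process and the tools it touches.
PROCESS_DEPENDENCIES = {
    "invoice_approval": {"erp", "email"},
    "employee_onboarding": {"hr_system", "email", "chat"},
    "lead_handoff": {"crm", "chat"},
}


def affected_processes(tool: str, dependencies: dict[str, set[str]]) -> list[str]:
    """Processes that interact with the tool being changed."""
    return sorted(name for name, tools in dependencies.items() if tool in tools)
```

For example, `affected_processes("chat", PROCESS_DEPENDENCIES)` would return the onboarding and lead handoff processes, giving the transition plan its starting scope.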

Parallel operation

Where possible, operate old and new tools simultaneously during transitions. This parallel period enables testing, comparison, and gradual migration without operational disruption.

Parallel operation requires additional infrastructure and effort but dramatically reduces transition risk.

Interface validation

Before completing transitions, validate that new tools satisfy interface requirements that processes depend on. This validation catches capability gaps before they affect operations.
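Interface validation can be partly automated with a small contract check that runs against any candidate tool. The sketch below is one possible shape, assuming a hypothetical `VersionedStore` interface for versioned document storage; `InMemoryStore` is only a stand-in for a real tool's adapter.

```python
from typing import Protocol, runtime_checkable


@runtime_checkable
class VersionedStore(Protocol):
    """Interface the process depends on: versioned document storage."""

    def save(self, name: str, content: str) -> int: ...

    def load(self, name: str, version: int) -> str: ...


class InMemoryStore:
    """Hypothetical candidate implementation used to exercise the check."""

    def __init__(self) -> None:
        self._versions: dict[str, list[str]] = {}

    def save(self, name: str, content: str) -> int:
        history = self._versions.setdefault(name, [])
        history.append(content)
        return len(history)  # version numbers start at 1

    def load(self, name: str, version: int) -> str:
        return self._versions[name][version - 1]


def validate_store(store: object) -> list[str]:
    """Return a list of contract problems; an empty list means the check passed."""
    if not isinstance(store, VersionedStore):
        return ["missing save/load operations"]
    problems = []
    first = store.save("policy", "draft")
    second = store.save("policy", "final")
    if second <= first:
        problems.append("versions do not increase")
    if store.load("policy", first) != "draft":
        problems.append("old version not retrievable")
    return problems
```

Running the same check against the old and new tool before cutover gives a quick signal about capability gaps, though it does not replace testing with real process data.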

Staged rollout

Transition processes gradually rather than all at once. Start with lower-risk processes, learning from each transition before progressing to more critical workflows. This staged approach contains problems when they occur and builds organizational capability for subsequent transitions.

Documentation currency

Tool transitions often reveal documentation gaps. Use transitions as opportunities to verify and update process documentation, ensuring it reflects current reality rather than historical assumptions.

Building organizational capability for tool agnosticism

Technical design patterns matter, but organizational capabilities determine whether process stability is actually achieved across tool changes.

Process architecture function

Organizations that successfully maintain process stability typically establish process architecture functions responsible for designing tool-agnostic processes and maintaining them through technology evolution.

These functions may sit within IT, within operations, or as independent capabilities. Regardless of location, they need a mandate and the resources to maintain process integrity.

Integration platform investment

Tool-agnostic design depends on integration capabilities that manage tool connections centrally. Investment in integration platforms pays ongoing dividends through reduced tool change costs.

Change management processes

Tool transitions require change management that addresses both technical and human dimensions. Organizations need established processes for evaluating tool changes, planning transitions, executing migrations, and supporting affected teams.

Continuous architecture review

Tool-agnostic design requires ongoing attention. As tools evolve and processes change, architectural integrity can degrade. Regular review ensures that design principles remain applied and technical debt gets addressed before it undermines process stability.

McKinsey's research found that only 21% of companies have meaningfully re-engineered their processes for modern technology environments. Organizations that establish tool-agnostic capabilities position themselves in this leading minority.

Measuring process stability

Track metrics that reveal whether process designs actually withstand tool changes.

Tool change impact

When tools change, how significantly do processes require modification? Decreasing modification requirements indicate improving tool agnosticism.
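One simple way to quantify this, offered as an assumption rather than a standard metric, is the share of process steps that had to be modified for each tool change, tracked over successive transitions.

```python
def change_impact(total_steps: int, modified_steps: int) -> float:
    """Fraction of a process's steps that had to change for a tool transition."""
    if total_steps <= 0:
        raise ValueError("total_steps must be positive")
    if not 0 <= modified_steps <= total_steps:
        raise ValueError("modified_steps must be between 0 and total_steps")
    return modified_steps / total_steps


def is_improving(impacts: list[float]) -> bool:
    """True when each transition needed no more modification than the one before."""
    return all(later <= earlier for earlier, later in zip(impacts, impacts[1:]))
```

A process with 20 steps where 5 were reworked has an impact of 0.25; a falling series of such values across transitions suggests the design is becoming more tool-agnostic.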

Transition velocity

How quickly can the organization complete tool transitions affecting a given process? Faster transitions suggest better-prepared process designs.

Operational continuity

During tool transitions, how much operational disruption occurs? Improving continuity indicates that tool-agnostic design is protecting operations from technology change.

Documentation accuracy

Following tool transitions, how accurately does documentation reflect new configurations? Better accuracy suggests documentation approaches that survive transitions effectively.

Total cost of tool change

What is the complete cost of tool transitions, including planning, implementation, and remediation? Declining costs indicate organizational capability improvement.

The business case for tool-agnostic design

Investing in tool-agnostic design requires justification. Several value drivers support the investment.

Reduced transition costs

Every tool change becomes less expensive when processes are designed for change resilience. Given typical technology evolution rates, these savings accumulate substantially over time.

Faster transition execution

Tool-agnostic processes enable faster transitions, reducing the period of parallel operation and uncertainty that tool changes create.

Improved technology agility

When tool changes are manageable, organizations can adopt better technology more readily. This agility enables capturing benefits from technology improvement that tool-dependent processes would forgo.

Lower vendor lock-in

Tool-agnostic design reduces vendor lock-in by making tool replacement feasible. This optionality provides negotiating leverage and protects against vendor risk.

How Kissflow supports tool-agnostic workflow design

Kissflow's BPM platform provides capabilities that support flexible workflow design independent of specific tool dependencies.

The platform's visual workflow builder enables defining process logic separately from integration implementations. Native integrations with hundreds of applications provide connection capabilities without hard-coding tool dependencies. And the platform's abstraction approach means that integration changes don't necessarily require process redesign.

For process owners seeking process stability despite technology evolution, Kissflow provides a workflow foundation designed for change resilience.

Frequently asked questions

1. What are tool-agnostic workflows and why are they important?

Tool-agnostic workflows are processes designed around business logic rather than specific platform features, so technology changes become manageable transitions rather than organizational crises. When processes depend on specific platform capabilities, they fail when those capabilities disappear or change. Organizations now manage over 100 SaaS applications amid continuous consolidation, vendor acquisitions, and new tool adoption, and each technology change creates process risk. Process stability that depends on technology stability is inherently fragile. Modern businesses cannot guarantee technology stability, so processes must be designed for flexibility.

2. What architectural principles enable flexible workflow design that survives tool changes?

Four core principles apply: abstraction, which separates what processes accomplish from how they accomplish it; loose coupling, which minimizes dependencies between process components and specific tools; standard interfaces, which use consistent data formats regardless of underlying implementations; and configuration over customization, which prefers adjustable parameters to hard-coded implementations. Together, these principles let underlying tools change without changing business logic. A layered architecture reinforces them by separating stable business logic, orchestration, integration, and presentation layers so that technology changes affect only the relevant layers.

3. How do I reduce tool dependency in my existing workflows?

Document business requirements independently of implementation, using business terms rather than system-specific language. Design data models abstractly around business entities rather than mirroring specific system structures. Use middleware for integrations, routing through abstraction layers rather than building point-to-point connections. Avoid deep dependencies on unique platform features, preferring capabilities available across multiple platforms. Plan for migration from the start by documenting dependencies, creating abstraction layers, and maintaining awareness of alternative implementations.

4. How do I balance tool independence with leveraging unique platform capabilities?

Apply differentiated approaches based on process importance: high-risk processes performing critical functions should prioritize tool independence, accepting capability limitations for stability. Competitive differentiation processes may warrant deeper tool integration when unique capabilities provide a significant advantage, accepting migration risk for competitive benefit. Commodity processes performing standard functions should maximize portability. Innovation processes may accept higher tool dependency during experimentation with plans to abstract if experiments become permanent. Not every process needs absolute abstraction.

5. How do I test whether my processes are truly tool-independent?

Conduct migration scenario planning, walking through hypothetical tool changes to identify dependencies that would complicate transitions. Perform integration audits, inventorying all connections between processes and underlying systems to identify tight couplings. Complete a feature dependency analysis, mapping which capabilities depend on specific tool features versus generally available functionality. Execute recovery testing, validating that processes could be rebuilt in alternative tools within acceptable timeframes and confirming that documentation and abstraction are sufficient. Regular testing reveals vulnerabilities before actual tool changes create urgent needs.

Discover how Kissflow enables process designs that survive technology evolution.
